Abiola Adeshina

AI Agent Infrastructure Engineer · Systems Architect · Open Source Creator

I'm a software engineer from Nigeria, obsessed with building the infrastructure layer that powers autonomous AI systems.

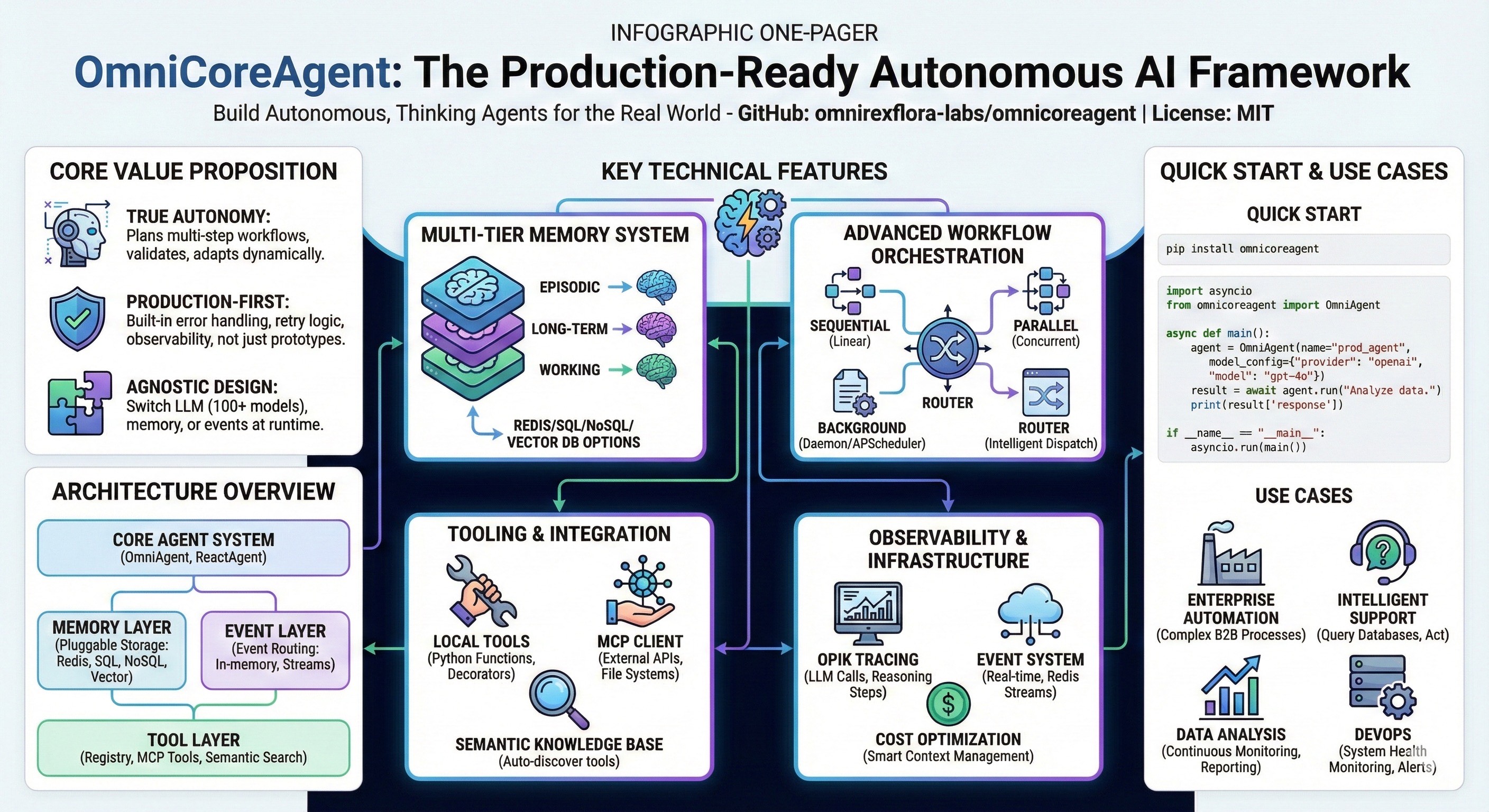

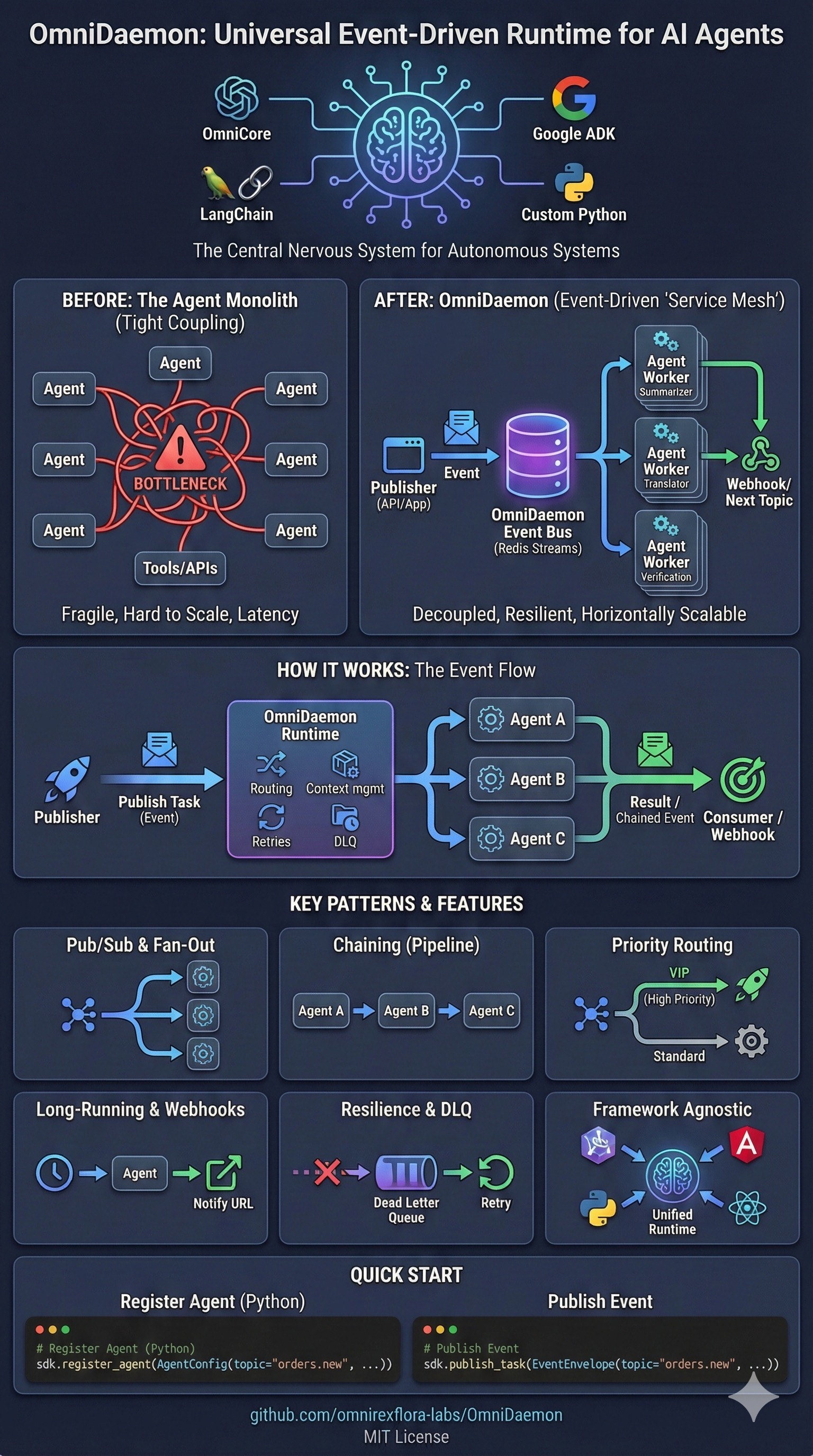

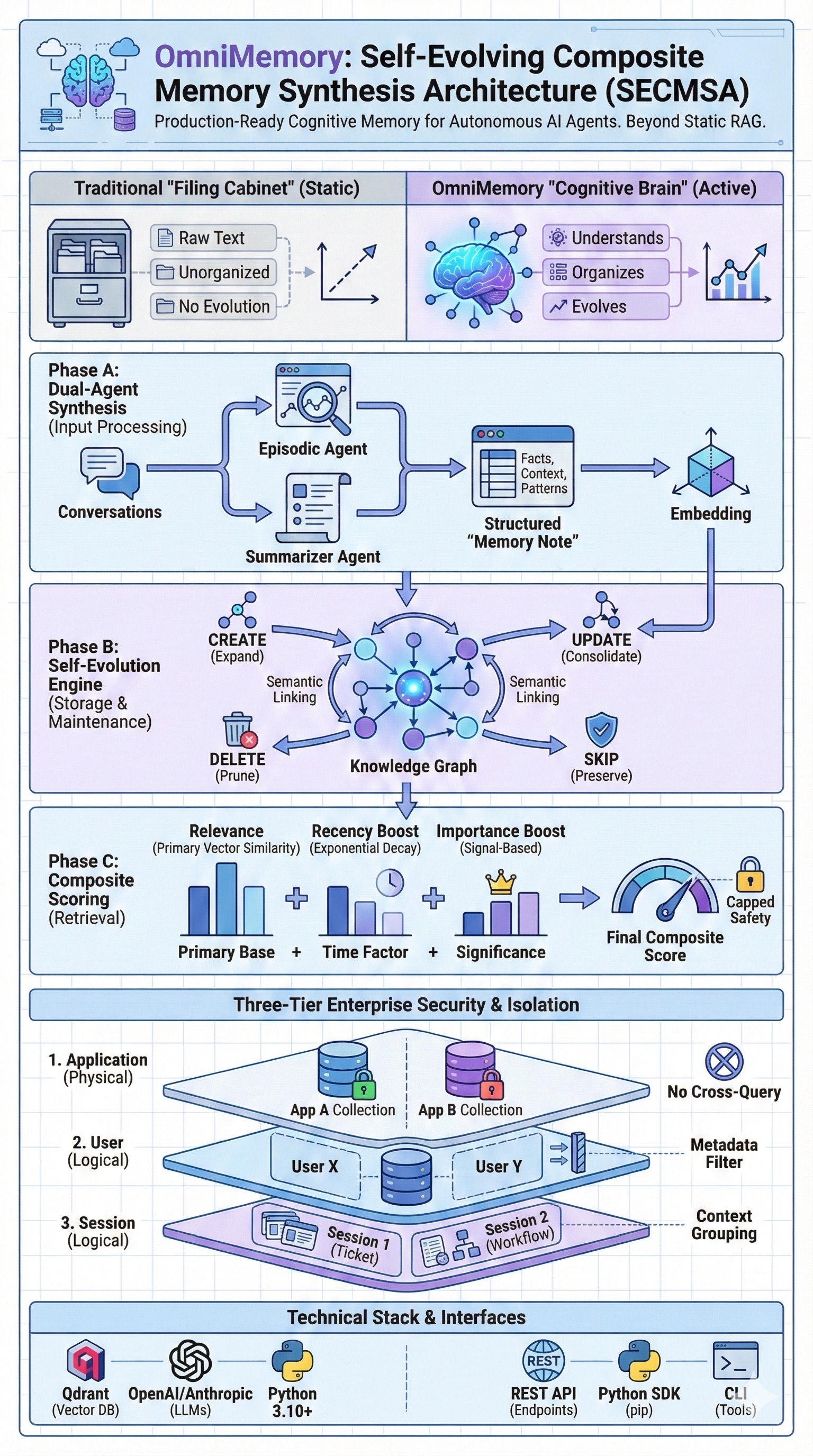

While others import frameworks, I architect and ship complete systems from scratch: custom memory synthesis engines, event-driven runtimes, and agent orchestration frameworks. Every component. Every line. Built solo.